Connecting Enterprise Data to LLMs

Introduction

Companies are rushing to bring AI into their workflows, but most systems hit the same wall: they don’t actually know your business. Out of the box, even the best chatbots can’t answer questions about your internal documents, customer history, or team-specific processes.

Most AI systems can’t access up-to-date or private information. They only know what was included in their training data, which usually stops at a certain point. In many cases, that cutoff is a year or more in the past. This means they can’t answer questions about recent changes, company-specific documents, or anything not publicly available. That’s a problem for businesses.

This is where Retrieval-Augmented Generation, or RAG, comes in. It’s a simple way to connect an AI system to your own data so it can start giving useful, accurate answers based on what’s true inside your organization, not just what’s on the public internet.

RAG is also a critical building block for AI agents—tools that can reason, take action, and support real work across your teams. If your company is thinking about AI agents, understanding RAG is a must.

This article explains what a RAG is and how it works in plain terms, walks through how it’s being used across teams like support, sales, and marketing, and breaks down the key data security, compliance, and GDPR-related questions decision makers need to address when putting these systems in place.

What is a RAG?

RAG is a system that combines two steps: retrieving relevant information and using it to generate a response. In plain terms, before answering your question, the system first looks up relevant information from a specific source of documents. Then it uses that information to generate a more accurate and useful response.

It’s like giving a chatbot a Google-like search capability for your private data. Instead of searching the whole internet, the system searches your company's documents, files, databases, or internal information sources.

This means you can ask natural questions and get precise answers based on your own proprietary data, even if that data wasn't included when the model was originally trained.

Some terms you’ll likely hear when talking about RAGs

If you're looking into RAG for your company, you’ll probably run into a few technical terms. You don't need to be an engineer to make use of RAG, but having a basic understanding of how it works can make conversations with vendors and teams a lot easier.

Here are a few terms that come up often:

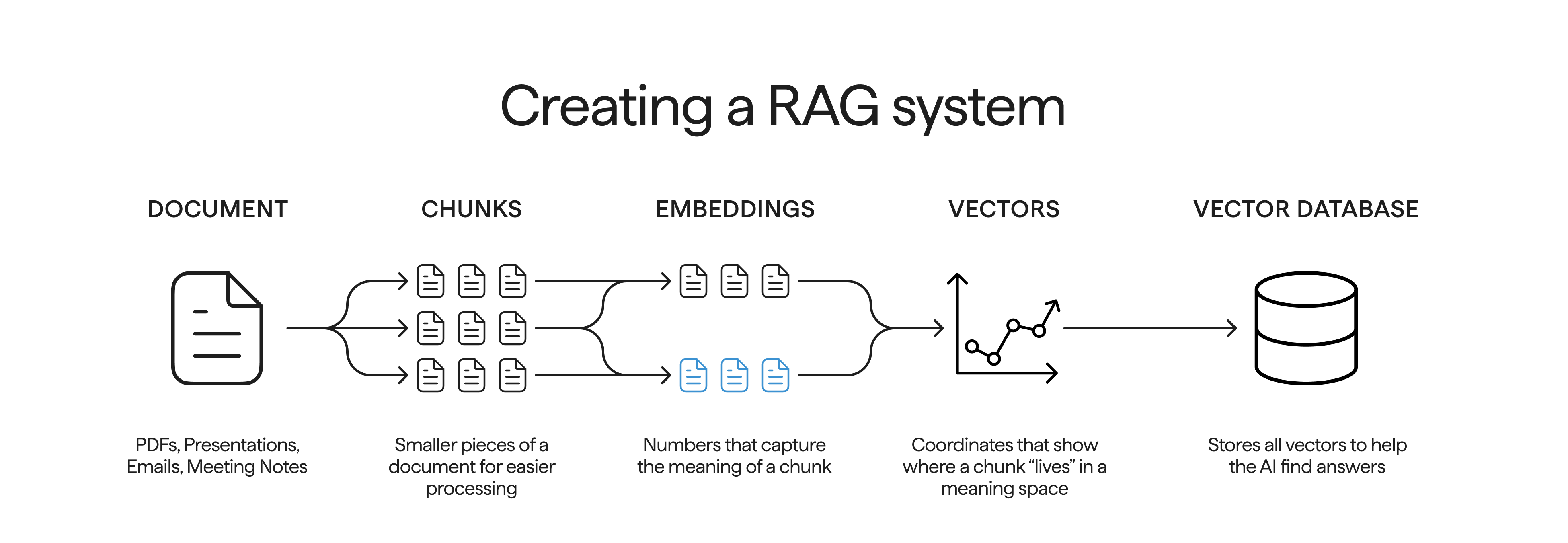

Chunks (Document Splitting)

Before your content is turned into embeddings, it’s usually split into smaller parts called chunks. Most documents, like PDFs, help center articles, or internal wikis, are too long for the AI to process all at once. So, they’re broken down into manageable pieces. Each chunk is then turned into an embedding and stored as a vector in the vector store. How you split your documents can affect how well the system finds relevant answers. If chunks are too long, the AI might miss important details. If they’re too short, the meaning might get lost. Getting this step right helps everything else — embeddings, vectors, and retrieval — work more smoothly.
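
To make the idea of chunking concrete, here is a minimal sketch in Python. It assumes a simple word-based split with a small overlap between neighboring chunks, which is one common approach and not the only one. The function name, the chunk size of 50 words, and the overlap of 10 words are illustrative assumptions, not recommendations.

```python
def split_into_chunks(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that share `overlap` words with the next chunk."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap  # how far the window moves forward each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Hypothetical 120-word document, used only to show the behavior.
document = " ".join(f"word{i}" for i in range(120))
chunks = split_into_chunks(document, max_words=50, overlap=10)
print(len(chunks))
```

The overlap means a sentence that falls on a chunk boundary still appears whole in at least one chunk. Real systems often split on paragraphs or headings instead of raw word counts.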

Embeddings

Embeddings are a way for the system to understand what your content is about. They turn text into a series of numbers that capture the meaning of the words. For example, the sentences “How do I reset my password?” and “I can’t log in to my account” might look different on the surface, but they mean something similar. Embeddings help the system recognize that and treat them as related, even if the exact words aren’t the same.

Vectors

An embedding is stored as a vector. A vector is just a list of numbers that represents what the text means. You can think of it like coordinates on a map. Just like a map uses latitude and longitude to show where things are, vectors help the system figure out where each piece of text “lives” in a space of meaning. If two pieces of content have similar coordinates, they are likely talking about the same thing. This helps the system find the most relevant content when someone asks a question.
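
The "similar coordinates" idea can be sketched with a few lines of Python. The example below uses cosine similarity, one common way to compare vectors. The three-number vectors are hand-picked for illustration only. Real embeddings come from a model and usually have hundreds or thousands of numbers.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return a score near 1.0 for vectors pointing the same way, near 0.0 for unrelated ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors (assumed values, not produced by a real embedding model).
reset_password = [0.9, 0.1, 0.0]  # "How do I reset my password?"
cannot_log_in = [0.8, 0.2, 0.1]   # "I can't log in to my account"
refund_policy = [0.1, 0.0, 0.9]   # "What is your refund policy?"

login_score = cosine_similarity(reset_password, cannot_log_in)
refund_score = cosine_similarity(reset_password, refund_policy)
print(login_score > refund_score)
```

With these toy values, the two login-related sentences score much closer to each other than either does to the refund sentence.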

Vector Stores

A vector store is a special type of database where all these vectors are stored. When someone asks a question, the system searches the vector store to find the most relevant pieces of content. Those are then used to help the AI generate a more accurate response.
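
As a rough illustration, a vector store can be pictured as a list of text-and-vector pairs with a search function on top. The class below is a hypothetical in-memory sketch, not the API of any real product. Production vector databases use specialized indexes so they can search millions of vectors quickly.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


@dataclass
class ToyVectorStore:
    """Keeps (text, vector) pairs in memory and compares the query against every one."""
    entries: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, vector: list[float]) -> None:
        self.entries.append((text, vector))

    def search(self, query_vector: list[float], top_k: int = 2) -> list[str]:
        scored = [(cosine(query_vector, vec), text) for text, vec in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # best match first
        return [text for _, text in scored[:top_k]]


store = ToyVectorStore()
# Toy vectors with assumed values; a real system would get these from an embedding model.
store.add("Password reset guide", [0.9, 0.1, 0.0])
store.add("Login troubleshooting", [0.8, 0.2, 0.1])
store.add("Refund policy", [0.1, 0.0, 0.9])

results = store.search([0.85, 0.15, 0.05], top_k=2)
print(results)
```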

Retrieval

Retrieval is the step that happens when someone sends a prompt to the AI. First, your question (prompt) is turned into an embedding just like the documents were. That means the system now has a numeric representation of what you're asking. It then compares that question embedding to the ones in the vector store to find the most relevant matches. These matched documents are pulled out and passed into the AI model, which reads them and uses the content to write a response that directly answers your question. All of this happens in a few seconds.
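
The retrieval step described above can be sketched end to end as follows. To keep the example self-contained, it uses a toy stand-in for an embedding model: it simply counts words from a small fixed vocabulary. That stand-in only matches shared words, not meaning, so treat it as a placeholder for a real embedding model. The vocabulary, function names, and sample chunks are all assumptions made for illustration.

```python
import math
import re

# Assumed vocabulary for the toy embedding; a real model needs no such list.
VOCABULARY = ["password", "reset", "login", "refund", "invoice", "shipping"]


def toy_embed(text: str) -> list[float]:
    """Turn text into a vector by counting how often each vocabulary word appears."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCABULARY]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Embed the question, score every chunk against it, and return the best matches."""
    question_vector = toy_embed(question)
    # For brevity the chunks are embedded on every call; real systems embed them once in advance.
    ranked = sorted(chunks, key=lambda c: cosine(question_vector, toy_embed(c)), reverse=True)
    return ranked[:top_k]


chunks = [
    "To reset your password, open the login page and choose 'Forgot password'.",
    "Refund requests are reviewed after we receive the invoice number.",
    "Shipping times depend on the destination country.",
]
best = retrieve("How do I reset my password?", chunks, top_k=1)
print(best)
```

The returned chunk is what would be handed to the language model along with the original question.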

These parts all work behind the scenes in a RAG setup. You don’t need to build or manage them yourself, but understanding these terms can help you ask the right questions, choose the right tools, and avoid sounding lost when AI comes up in meetings or conversations with your team.

What type of data can be used in a RAG?

A RAG system works best when it has access to the information that matters to your business. That means connecting it to the tools and documents your teams already use every day.

You can use many types of data in a RAG setup. These include PDFs, internal reports, wiki pages, customer support tickets, meeting notes, spreadsheets (like Excel or Google Sheets), presentations (like PowerPoints), product documentation, contracts, and training manuals. Basically, if it’s written down and you want the system to be able to “read” it and use it to answer questions, it can probably be included.

The data doesn’t need to be in one place. You can connect your RAG to files stored in Google Drive or Microsoft SharePoint, or pull from internal wikis like Confluence or Notion. Some teams also use Slack messages or CRM notes. You don’t have to copy and paste everything into a new system. Instead, most RAG platforms let you plug into your existing data sources. Once connected, the system keeps your data searchable and up to date in the background.

This is what makes RAG useful in real-world settings. If your company has a growing list of documents spread across different platforms, you don’t need to reorganize everything. You just need to point the RAG system to the right places. From there, it can start answering questions based on the latest files, no matter where they live.

Practical Applications

Once a RAG system is connected to your company’s data, it can be used across different teams to support everyday work. Whether it’s helping a support agent reply to a ticket, assisting a sales rep during a customer call, or giving a marketer quick access to past campaigns, RAG makes your internal knowledge easier to find and use. Below are a few examples of how RAG systems can be applied in real business settings.

Customer Support

One of the most useful ways to apply a RAG system is in customer support. Instead of relying on your team to search through shared folders, old emails, or internal docs, RAG does the heavy lifting in the background. It quickly finds the most relevant content and suggests accurate responses based on real company knowledge.

You can set up a RAG system using the materials your support team already works with. This includes knowledge base articles, refund policies, internal how-to guides, and your company website. You can also include historical emails with customers. This gives the system context about your policies and products, as well as past conversations. When a customer writes in, the RAG system can bring up their previous emails so the agent has the full picture. That helps make the response more accurate and personal.

RAG systems can also pull key details from new support tickets, like what the customer is asking, what product it involves, or what kind of issue it is. The system then looks for similar past tickets, finds out how those were resolved, and uses that to suggest what to do next. This could include offering a fix, pointing to a relevant policy, or drafting a reply. The human agent still reviews and sends the final response, but the system takes care of most of the research and writing.

This leads to faster replies, fewer mistakes, and more consistent communication. It also helps new agents ramp up quickly since the system guides them with past examples and clear suggestions.

Sales

RAG systems are also useful in sales, especially when conversations rely on quick access to accurate, detailed information. Sales reps often need to answer questions about products, pricing, terms, or how your offering compares to a competitor. Instead of digging through folders or asking around, a RAG system can surface the right information in seconds.

You can feed a RAG system with internal knowledge files, product catalogs, pricing sheets, terms of service, and sales playbooks. It can also pull in content from your website, like feature pages or FAQs. On top of that, you can include scraped content from the customer’s website, such as what tools they use, who their clients are, or what industry problems they focus on. This gives the system a broader view of the customer and helps your team tailor their messaging.

When a sales rep is preparing for a call or responding to a question over email, the RAG can pull together key talking points, past conversations with the same lead, and any relevant material from your internal content. It can suggest answers, propose next steps, or even draft personalized follow-up messages. This helps reps focus on the conversation instead of spending time looking for files or double-checking facts.

In short, RAG helps your sales team stay sharp, respond faster, and keep messaging aligned with your actual offerings and terms. It makes prep easier and helps reps speak confidently without needing to memorize everything.

Marketing

Marketing teams deal with a large mix of content, tools, and channels, and keeping everything aligned can be a challenge. A RAG system can help by making internal content searchable and available on demand. Instead of scrolling through folders or asking a colleague for the latest version of a brand deck, marketers can ask the system and get the right material instantly.

You can set up a RAG with your brand guidelines, historical campaign assets, blog posts, ad copy, customer personas, and tone-of-voice documents. It can also include slide decks, press releases, SEO briefs, and other materials your team has used in the past. This gives the system a full view of what your brand sounds like, what it’s said before, and what’s already been done.

When someone on the team is writing a new post, planning a campaign, or reviewing creative, the RAG can surface past examples, remind them of brand rules, and suggest language that matches your style. It can also help make sure messaging is consistent across teams and channels. If someone needs to respond quickly to a new trend or request, the system gives them a solid starting point based on what’s already worked.

RAG doesn’t replace your creative process. It just speeds up the work of finding what you’ve already built and making sure your marketing stays focused, consistent, and on-brand.

Data Security in RAG Systems

When building a RAG system, it's important to understand where your data goes, how it's stored, and when it's shared with external services. Here's a simple breakdown of the two main areas to focus on:

1. How embeddings are created and stored

When you set up a RAG system, your internal documents are turned into embeddings and stored in a vector database. These embeddings are numerical representations of your content, not raw text, but they still reflect the meaning of your data. That makes it important to think about both how they're created and where they're stored.

Start by considering the service you use to create the embeddings. If you're using an external provider like OpenAI or another cloud-based API, your data is sent to their servers during processing. Even if the provider says it doesn't store your data, you need to be clear about where that processing takes place. For companies operating under GDPR or other regional data protection laws, the physical location of the data center matters. You may need to ensure that data stays within the EU or another approved region.

If using an external provider is not a good fit for your company, there are also plenty of options for creating embeddings entirely inside your own environment. Open-source models and self-hosted vector databases are available, and many of them are easy to set up. This gives you full control over both processing and storage without needing to send any data to third-party servers.

Next, think about where your vector store is hosted. Some RAG platforms offer their own managed storage, while others let you host the vector database in your own cloud or on-prem environment. If your data includes sensitive, private, or regulated information, it's often safer to use infrastructure you control or a cloud provider that meets your compliance requirements. In either case, you should limit access to only the systems and people who need it.

2. The language model used when asking questions

When a user asks a question, the system first turns that question into an embedding. It then compares that embedding to the ones in your vector store to find the most relevant matches. Only those few matched documents are sent to the language model to generate a response. The model does not have access to your full document set. It only sees the small subset selected for that specific question.
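
To illustrate that only the selected documents reach the model, here is a minimal sketch of how a prompt might be assembled. The function name, the prompt wording, and the sample documents are hypothetical. Actual platforms format their prompts in their own ways.

```python
def build_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Combine the question with ONLY the retrieved chunks; nothing else is included."""
    context = "\n\n".join(
        f"[{number}] {chunk}" for number, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical document set; only the first entry is relevant to the question.
document_set = [
    "Refunds are available within 30 days of purchase.",
    "Salary bands are confidential and managed by HR.",
    "Support is open Monday to Friday.",
]
selected = [document_set[0]]  # stand-in for what the retrieval step would return
prompt = build_prompt("What is the refund window?", selected)
print(prompt)
```

Everything in the returned string is what would leave your environment when an external model is used, so the retrieval step effectively decides what gets shared.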

If you are using a language model from an external provider, such as OpenAI or Anthropic, those selected documents are sent to the provider's servers for processing. Even though the amount of data is small, it is still leaving your environment, which means data privacy and compliance need to be considered. Most providers offer settings to prevent storage or reuse of that data, but you should confirm those settings are properly configured.

Just like with embedding creation and storage, the physical location of processing is also relevant here. If your company is subject to GDPR or similar laws, you may need to make sure that all model interactions happen within approved regions, such as within the EU. Many providers support this by offering regional data centers, but you need to verify where processing takes place.

If using a public model does not align with your company’s requirements, you can also use a private setup. This might involve hosting the model on your own servers or using a managed service that keeps all processing inside your cloud environment. Tools like Ollama make it easy to run open models such as Meta’s Llama, Mistral, or DeepSeek locally, without sending any data to an external provider. This gives you full control over where processing happens and how your data is handled.
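
For teams curious what a local setup looks like in practice, the sketch below sends a prompt to a model running on the same machine through Ollama's local HTTP endpoint. It assumes Ollama is installed and running on its default port, and that a model named "llama3" has already been downloaded. The model name and the helper function names are assumptions for illustration.

```python
import json
import urllib.request

# Ollama's default local address; the request never leaves this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Prepare a POST request for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local model and return its text answer."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as response:
        return json.loads(response.read())["response"]


# Building the request does not contact any server; ask_local_model() does.
request = build_request("Summarize our refund policy in one sentence.")
print(request.full_url)
```

Calling ask_local_model() only works when the local Ollama service is running. If it is not, the call fails with a connection error instead of falling back to an external provider.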

By carefully managing both document retrieval and model access, and by making deliberate choices about where processing occurs, you can build a RAG setup that meets your company’s security and compliance standards.

RAGs vs. LLM fine-tuning

If you want to give an AI system access to specialized knowledge, there are two main ways to do it. You can either use RAG or fine-tune a large language model (LLM). Both can work, but they are very different in terms of cost, effort, and flexibility.

Fine-tuning means you train a copy of the model on your own data. That process takes time, requires technical skills, and can be expensive. It also locks that knowledge into the model, so if your information changes, you have to fine-tune again. Fine-tuned models can still hallucinate, especially if the training data is limited or outdated. The model might generate confident answers that sound right but are incorrect or misleading.

RAG is simpler. It doesn’t change the model. Instead, it pulls in relevant documents and feeds them into the model just before it generates a response. This way, the AI answers based on the latest information the system has indexed. Because the model has actual reference material in front of it when it answers, the risk of hallucinations is generally lower, although it does not disappear.

Conclusion

RAG is a way to enable a Google-search-like experience with your enterprise data. It’s also a key component in building AI agents that can actually support day-to-day work. You don’t need to be technical to get started, but you do need to understand the basics—how it works, what choices need to be made, and how to keep your data secure.

When done right, RAG helps your teams find answers faster, stay consistent, and get more value from the information your company already has.
